Expression localization is a fundamental step in micro expression (ME) analysis. Most micro expression videos collected to date are short and show participants whose heads remain relatively still. Such short videos rarely include other head movements, and environmental factors like lighting changes can often be disregarded. As a result, micro expressions stand out in short videos and are comparatively easy to detect. Long videos do not offer these advantages, and they are the setting this study targets.

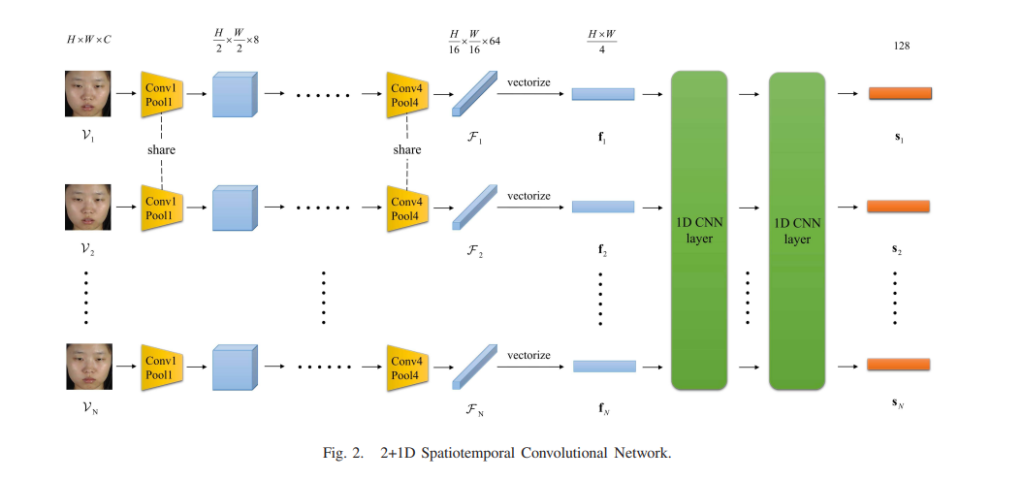

This study proposes a novel convolutional neural network (CNN)-based approach for identifying multi-scale spontaneous micro expression intervals in long videos. The network is named the Micro-Expression Spotting Network (MESNet) and consists of three modules. The first module is a (2+1)D spatio-temporal convolution network that uses 2D convolution to extract spatial features and 1D convolution to extract temporal features. The second module is a Clip Proposal Network that generates candidate micro expression clips. The final module is a Classification Regression Network that classifies each proposed clip as containing a micro expression or not, and further refines its temporal boundaries.
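To make the factorized convolution in the first module concrete, here is a minimal pure-Python sketch of the general (2+1)D idea: a 2D convolution applied to each frame, followed by a 1D convolution along the time axis. It is an illustration only and not MESNet's actual layers. The function names, the single-channel input, the absence of padding, and the kernel values are all assumptions made for the example.

```python
def conv2d_valid(frame, kernel):
    """Valid-mode 2D cross-correlation of one frame (a list of rows) with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(frame) - kh + 1
    out_w = len(frame[0]) - kw + 1
    return [
        [
            sum(frame[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def conv1d_time_valid(frames, kernel_t):
    """Valid-mode 1D cross-correlation along the time axis, applied per pixel."""
    kt = len(kernel_t)
    h, w = len(frames[0]), len(frames[0][0])
    return [
        [
            [sum(frames[t + c][i][j] * kernel_t[c] for c in range(kt))
             for j in range(w)]
            for i in range(h)
        ]
        for t in range(len(frames) - kt + 1)
    ]


def conv2plus1d(video, spatial_kernel, temporal_kernel):
    """Hypothetical (2+1)D block: spatial 2D step first, then temporal 1D step."""
    spatial = [conv2d_valid(frame, spatial_kernel) for frame in video]
    return conv1d_time_valid(spatial, temporal_kernel)


# Toy video: 4 frames of 3x3 pixels, where every pixel of frame t has value t.
video = [[[t] * 3 for _ in range(3)] for t in range(4)]
spatial_kernel = [[1, 1], [1, 1]]   # sums each 2x2 neighbourhood (assumed values)
temporal_kernel = [-1, 1]           # difference between consecutive frames (assumed values)

features = conv2plus1d(video, spatial_kernel, temporal_kernel)
print(len(features), len(features[0]), len(features[0][0]))  # 3 2 2
print(features[0])  # [[4, 4], [4, 4]]
```

Splitting one 3D kernel into a spatial step and a temporal step keeps the two kinds of features separate, which matches the description above of 2D convolution for spatial features and 1D convolution for temporal features. A real network would add channels, learned weights, and nonlinearities between the two steps.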

Additionally, the study introduces a new evaluation metric for micro expression detection. Extensive experiments were conducted on the CAS(ME)² and SAMM long video datasets, using leave-one-subject-out cross-validation to assess localization performance. The results show that MESNet significantly improves the F1-score and outperforms other state-of-the-art methods.
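For readers unfamiliar with how an F1-score can be computed for interval detection, the sketch below shows one common convention: a predicted interval counts as a true positive when its intersection-over-union (IoU) with an unmatched ground-truth interval reaches a threshold. This is not the new metric proposed in the paper, whose definition is not given here. The function names, the 0.5 threshold, the inclusive frame indexing, and the greedy matching are all assumptions for illustration.

```python
def interval_iou(a, b):
    """IoU of two inclusive frame intervals given as (onset, offset)."""
    inter = min(a[1], b[1]) - max(a[0], b[0]) + 1
    if inter <= 0:
        return 0.0
    union = (a[1] - a[0] + 1) + (b[1] - b[0] + 1) - inter
    return inter / union


def spotting_f1(predicted, ground_truth, iou_threshold=0.5):
    """F1-score with greedy one-to-one matching of predictions to ground truth."""
    matched = set()
    true_positives = 0
    for pred in predicted:
        for k, truth in enumerate(ground_truth):
            if k not in matched and interval_iou(pred, truth) >= iou_threshold:
                matched.add(k)
                true_positives += 1
                break
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(ground_truth)
    return 2 * precision * recall / (precision + recall)


# Made-up intervals for one long video (frame indices are illustrative only).
ground_truth = [(10, 20), (50, 60)]
predicted = [(12, 22), (100, 110), (48, 58)]

f1 = spotting_f1(predicted, ground_truth)
print(round(f1, 3))  # 0.8
```

In this toy case two of the three predictions overlap a ground-truth interval with IoU 9/13, so precision is 2/3, recall is 1, and the F1-score is 0.8. Under leave-one-subject-out cross-validation, such counts would typically be accumulated over all held-out subjects before computing the final score.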

In summary, this study not only introduces a CNN-based method for detecting multi-scale micro expression intervals in long videos but also addresses issues of small sample size and sample imbalance with several specialized techniques. Furthermore, it proposes a new evaluation metric for micro expression detection.

Image source: MESNet: A Convolutional Neural Network for Spotting Multi-Scale Micro-Expression Intervals in Long Video